Last week, the Washington Post reported that Google, Microsoft, and Meta are training their AI models on users’ conversations, documents, and photos.

The week before that, TechCrunch reported that X, formerly known as Twitter, updated its privacy policy to disclose the use of public data for AI training and machine learning.

As conversations about data privacy have grown louder in recent months, consumer concerns have grown with them.

The State of Data Privacy

Companies using data to train AI isn’t new.

When you get recommendations on your Netflix account or suggested products on Amazon, those are examples of trained algorithms.

Here’s what’s different today: In the past six months, companies have been in a race to collect as much user data as possible to gain the AI edge over the competition.

In some cases, the data collected is confidential and sensitive, which the AI model has the potential to spit back out. So, not exactly reassuring.

U.S. lawmakers like Rep. Jan Schakowsky blame the AI boom for increased data privacy violations “as companies vacuum up any data they can to monetize using AI models.”

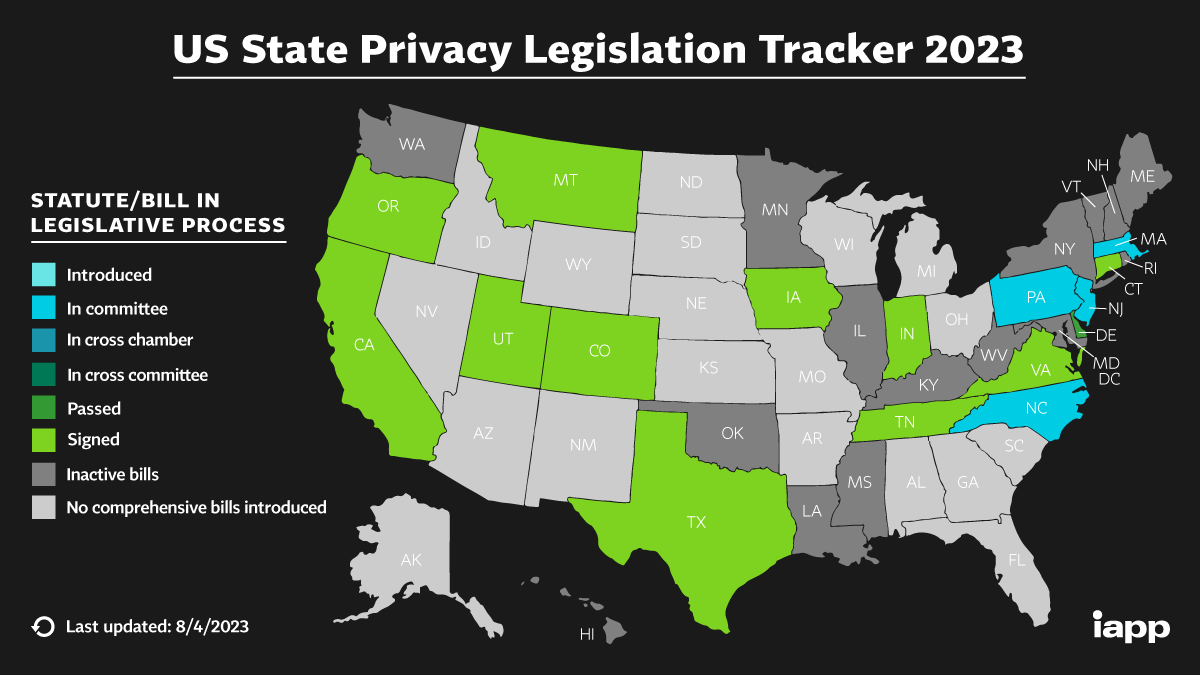

To make matters worse, the U.S. doesn’t have a universal, overarching data privacy law. Instead, it has laws that pertain to specific sectors and populations – like the Health Insurance Portability and Accountability Act (HIPAA) and the Children’s Online Privacy Protection Rule (COPPA).

In contrast, the EU has the General Data Protection Regulation (GDPR), a comprehensive law implemented in 2018 that outlines strict data collection guidelines and large fines for orgs that violate them.

Today, here’s where we stand: Only 11 U.S. states, including California, Texas, and Tennessee, have comprehensive data protection laws, with most going into effect in the next two years.

Where is data privacy headed?

Etienne Cussol, compliance analyst at Termly, says AI has reshaped the relationship between organizations and data protection.

“AI models’ reliance on massive amounts of data to learn and to generate is at complete odds with some of the core principles of data privacy, such as data minimization, purpose limitation, or privacy by design and by default,” he says.

But how can brands make meaningful advancements while managing user privacy concerns?

Cussol says it starts with offering users transparency and control.

“Businesses seeking to implement a new AI system should prioritize understanding the impacts it would have on their organization’s data flow, to provide their users with clear and transparent notices and to create accessible and specific control mechanisms,” he says.

Transparency is a large piece of the puzzle. Take Zoom, for example, which took a big hit when it updated its terms of service (TOS).

In March, the language in its TOS suggested that Zoom could broadly use customer content to train its AI models. In early August, word spread on Twitter.

Well time to retire @Zoom, who is basically wants to use/abuse you to train their AI https://t.co/b6dNCO77aY

— Gabriella "Biella" Coleman (@BiellaColeman) August 6, 2023

“Consumers are absolutely right to look into how their personal data is being used in our increasingly connected world and to push for more accountability,” says Cussol.

Following the backlash from users and privacy advocates, Zoom went into damage control mode and revised its terms. The TOS now states that no customer data (including audio, video, polls, and attachments) will be used to train its AI models without prior user consent.

Zoom was just one instance. In many cases, users are either unaware of how their data is being used or don’t have the option to opt out.

So where does this leave us? Waiting for more regulation.

According to a Politico article, tech lobbyists are hoping for a comprehensive federal AI law. The worry is that each state creating its own bill will lead to a “nationwide patchwork of inconsistent laws,” which will slow down innovation and create a host of compliance issues.

In the meantime, Cussol’s takeaway for brands is to view data privacy as a market demand in addition to a regulatory one.

Those looking to join the AI race can preserve trust with this approach while they strive for innovation.